I’ve lately gotten into the habit of writing aesthetically pleasing ebooks with LaTeX for myself on new subjects that I come across. This way, I can (1) learn more about TeX (always a huge bonus) and (2) create documents that are stable, portable (PDF output), beautiful, and printer-friendly. I also put all the .tex files and accompanying makefiles into version control (git) and sync them across all of my computers, to ensure that they last forever.

One of those new subjects for me right now is the Haskell programming language. I started copying down small code snippets from various free resources on the web, with the help of the listings package (its main environment is named lstlisting).

The Problem

Unfortunately, I got tired of referencing line numbers manually to explain the code snippets, like this:

\documentclass[twoside]{article}
\usepackage{fontspec} % for \addfontfeature (needs xelatex or lualatex)
\usepackage{listings} % for \lstnewenvironment and \lstset
\usepackage[x11names]{xcolor} % for a set of predefined color names, like LemonChiffon1
\newcommand{\numold}[1]{{\addfontfeature{Numbers=OldStyle}#1}}
\lstnewenvironment{csource}[1][]
{\lstset{basicstyle=\ttfamily,language=C,numberstyle=\numold,numbers=left,frame=lines,framexleftmargin=0.5em,framexrightmargin=0.5em,backgroundcolor=\color{LemonChiffon1},showstringspaces=false,escapeinside={(*@}{@*)},#1}}
{}

\begin{document}

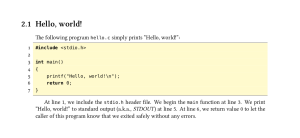

\section{Hello, world!}

The following program \texttt{hello.c} simply prints ``Hello, world!'':

\begin{csource}
#include <stdio.h>

int main()
{
    printf("Hello, world!\n");
    return 0;
}
\end{csource}

At line 1, we include the \texttt{stdio.h} header file. We begin the \texttt{main} function at line 3. We print ``Hello, world!'' to standard output (a.k.a., \textit{STDOUT}) at line 5. At line 6, we return value 0 to let the caller of this program know that we exited safely without any errors.

\end{document}

Output (compiled with the xelatex command):

This is horrible. Any time I add or delete a single line of code, I have to manually go back and change all of the numbers. On the other hand, I could instead use the \label{labelname} command inside the listing on any particular line (wrapped in the escapeinside delimiters), and get the line number of that label with \ref{labelname}, like so:

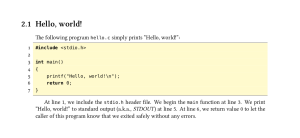

\section{Hello, world!}

The following program \texttt{hello.c} simply prints ``Hello, world!'':

\begin{csource}
#include <stdio.h>(*@\label{include}@*)

int main()(*@\label{main}@*)
{
    printf("Hello, world!\n");(*@\label{world}@*)
    return 0;(*@\label{return}@*)
}
\end{csource}

At line \ref{include}, we include the \texttt{stdio.h} header file. We begin the \texttt{main} function at line \ref{main}. We print ``Hello, world!'' to standard output (a.k.a., \textit{STDOUT}) at line \ref{world}. At line \ref{return}, we return value 0 to let the caller of this program know that we exited safely without any errors.

Output:

(The only difference is that the line number references are now red. This is because I also used the hyperref package with the colorlinks option.)

But I felt like this was not a good solution at all (it is the one suggested by the listings package’s official manual, Section 7 “How tos”). For one thing, I have to think of a new label name for every line I want to address. Furthermore, I have to compile the document twice, because \ref reads its values from the .aux file written during the previous run. Yuck.

Enlightenment

So again, with my internet-research hat on, I tried to figure out a better solution. The first thing that came to mind was how the San Francisco-based publisher No Starch Press displayed source code listings in their newer books. Their stylistic approach to source code listings is probably the most sane (and beautiful!) one I’ve come across.

After many hours of tinkering, trial-and-error approaches, etc., I finally got everything working smoothly. Woohoo! From what I can tell, my rendition looks picture-perfect, and dare I say, even better than their version (because I use colors, too). Check it out!

\documentclass{article}
\usepackage{fontspec} % for \addfontfeature (needs xelatex or lualatex)
\usepackage{listings} % for \lstnewenvironment and \lstset
\usepackage{ifthen} % for \ifthenelse
\usepackage[x11names]{xcolor} % for a set of predefined color names, like LemonChiffon1
\newcommand{\numold}[1]{{\addfontfeature{Numbers=OldStyle}#1}}
\usepackage{libertine} % for the pretty dark-circle-enclosed numbers
% Allow "No Starch Press"-like custom line numbers (essentially, bulleted line numbers for only those lines the author will address)
\newcounter{lstNoteCounter}

\newcommand{\lnnum}[1]
{\ifthenelse{#1 = 1}{\libertineGlyph{uni2776}}
{\ifthenelse{#1 = 2}{\libertineGlyph{uni2777}}
{\ifthenelse{#1 = 3}{\libertineGlyph{uni2778}}
{\ifthenelse{#1 = 4}{\libertineGlyph{uni2779}}
{\ifthenelse{#1 = 5}{\libertineGlyph{uni277A}}
{\ifthenelse{#1 = 6}{\libertineGlyph{uni277B}}
{\ifthenelse{#1 = 7}{\libertineGlyph{uni277C}}
{\ifthenelse{#1 = 8}{\libertineGlyph{uni277D}}
{\ifthenelse{#1 = 9}{\libertineGlyph{uni277E}}
{\ifthenelse{#1 = 10}{\libertineGlyph{uni277F}}
{\ifthenelse{#1 = 11}{\libertineGlyph{uni24EB}}
{\ifthenelse{#1 = 12}{\libertineGlyph{uni24EC}}
{\ifthenelse{#1 = 13}{\libertineGlyph{uni24ED}}
{\ifthenelse{#1 = 14}{\libertineGlyph{uni24EE}}
{\ifthenelse{#1 = 15}{\libertineGlyph{uni24EF}}
{\ifthenelse{#1 = 16}{\libertineGlyph{uni24F0}}
{\ifthenelse{#1 = 17}{\libertineGlyph{uni24F1}}
{\ifthenelse{#1 = 18}{\libertineGlyph{uni24F2}}
{\ifthenelse{#1 = 19}{\libertineGlyph{uni24F3}}
{\ifthenelse{#1 = 20}{\libertineGlyph{uni24F4}}
{NUM TOO HIGH}}}}}}}}}}}}}}}}}}}}}
\newcommand*{\lnote}{\stepcounter{lstNoteCounter}\vbox{\llap{{\lnnum{\thelstNoteCounter}}\hskip 1em}}}
\lstnewenvironment{csource2}[1][]
{
\setcounter{lstNoteCounter}{0}
\lstset{basicstyle=\ttfamily,language=C,numberstyle=\numold,numbers=right,frame=lines,framexleftmargin=0.5em,framexrightmargin=0.5em,backgroundcolor=\color{LemonChiffon1},showstringspaces=false,escapeinside={(*@}{@*)},#1}
}
{}

\begin{document}

\section{Hello, world!}

The following program \texttt{hello.c} simply prints ``Hello, world!'':

\begin{csource2}
(*@\lnote@*)#include <stdio.h>
/* This is a comment. */
(*@\lnote@*)int main()
{
(*@\lnote@*)    printf("Hello, world!\n");
(*@\lnote@*)    return 0;
}
\end{csource2}

We first include the \texttt{stdio.h} header file \lnnum{1}. We then declare the \texttt{main} function \lnnum{2}. We then print ``Hello, world!'' to standard output (a.k.a., \textit{STDOUT}) \lnnum{3}. Finally, we return value 0 to let the caller of this program know that we exited safely without any errors \lnnum{4}.

\end{document}

Output (compiled with the xelatex command):

Ah, much better! This is pretty much how No Starch Press does it, except that we’ve added two things: the line number count on the right-hand side, and a colored background to make the code stand out a bit from the page (easier on the eyes when quickly skimming). These options are easily adjustable or removable to suit your needs (see the \lstset line in the csource2 environment definition). For a direct comparison, download some of their sample chapter offerings from Land of Lisp (2010) and Network Flow Analysis (2010) and see for yourself.

Explanation

If you look at the code, you can see that the only real thing that changed in the listing code itself is the use of a new \lnote command. The \lnote command increments the lstNoteCounter counter and then prints a symbol: whatever the \lnnum command produces for the counter’s current value. The \lnnum command is basically a long if/else chain (I tried using a switch statement construct I found elsewhere, but then extra spaces would get added in). It can produce a nice glyph, but only up to 20; for anything higher, it just displays the string “NUM TOO HIGH”. This is because it uses the Linux Libertine font to create the glyph (with \libertineGlyph), and Libertine’s black-circle-enclosed numerals only go up to 20. A caveat: Linux Libertine’s LaTeX commands are subject to change without notice, as the package is currently in the “alpha” stage of development (e.g., the \libertineGlyph command actually used to be called the \Lglyph command, if I recall correctly).
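As an aside, the same lookup could probably be written with TeX’s \ifcase primitive instead of nested \ifthenelse calls. What follows is only a sketch of that idea, not the code used for the screenshots: \lnnumalt is a made-up name, the “NUM TOO LOW” text for 0 is my own addition, and it assumes the same \libertineGlyph command and glyph names as \lnnum. The trailing % signs keep the line ends from turning into the kind of stray spaces mentioned above.

```latex
% Hypothetical alternative to \lnnum, using \ifcase instead of nested \ifthenelse.
% \numexpr ... \relax (e-TeX, available in xelatex) cleanly delimits the number.
\newcommand{\lnnumalt}[1]{%
  \ifcase\numexpr#1\relax
    NUM TOO LOW% 0 (assumption: the original prints NUM TOO HIGH here)
  \or\libertineGlyph{uni2776}% 1
  \or\libertineGlyph{uni2777}% 2
  \or\libertineGlyph{uni2778}% 3
  \or\libertineGlyph{uni2779}% 4
  \or\libertineGlyph{uni277A}% 5
  \or\libertineGlyph{uni277B}% 6
  \or\libertineGlyph{uni277C}% 7
  \or\libertineGlyph{uni277D}% 8
  \or\libertineGlyph{uni277E}% 9
  \or\libertineGlyph{uni277F}% 10
  \or\libertineGlyph{uni24EB}% 11
  \or\libertineGlyph{uni24EC}% 12
  \or\libertineGlyph{uni24ED}% 13
  \or\libertineGlyph{uni24EE}% 14
  \or\libertineGlyph{uni24EF}% 15
  \or\libertineGlyph{uni24F0}% 16
  \or\libertineGlyph{uni24F1}% 17
  \or\libertineGlyph{uni24F2}% 18
  \or\libertineGlyph{uni24F3}% 19
  \or\libertineGlyph{uni24F4}% 20
  \else NUM TOO HIGH%
  \fi
}
```

It would be called exactly like \lnnum, e.g. \lnnumalt{3} in the text or \lnnumalt{\thelstNoteCounter} inside \lnote.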

The real star of the show is the \llap command, which typesets its argument in a zero-width box that sticks out to the left of the current position. I did not know about this command until I stumbled on this page yesterday. For something like an hour I toiled over trying to use \marginpar or \marginnote (the marginnote package) to get the same effect, without success. For one thing, I could not get margin notes to stay on one side (e.g., always on the left, or always on the right) when the document class uses the twoside option.
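To see what \llap does in isolation, here is a minimal sketch outside of any listing. The bullet and the 1em gap are arbitrary choices for illustration. Note the \noindent: \llap produces a box, and a box on its own does not start a paragraph, so something has to switch TeX into horizontal mode first.

```latex
\documentclass{article}
\begin{document}
% \llap puts its contents in a zero-width box that extends to the left,
% so the bullet hangs in the margin and the text still starts at the margin edge.
\noindent\llap{$\bullet$\hskip 1em}This line starts flush with the left margin;
the bullet sits 1em to the left of it.
\end{document}
```

The \lnote command works the same way, with the \lnnum glyph in place of the bullet.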

The custom \numold command (used here to typeset the line numbers with old style figures) is actually a resurrection of a command of the same name from an older, deprecated Linux Libertine package. The cool thing about how it’s defined is that you can use it with or without an argument. Because \numold relies on \addfontfeature, which comes from the fontspec package, you need an engine that fontspec supports; I use XeTeX (i.e., I compile with the xelatex command).
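For reference, a small standalone test of \numold might look like the sketch below. It assumes that the libertine package selects an OpenType Linux Libertine font with old style figures when run under xelatex; with a different font setup the second set of digits may look the same as the first.

```latex
\documentclass{article}
\usepackage{fontspec}  % provides \addfontfeature
\usepackage{libertine} % assumed to select Linux Libertine as the main font
\newcommand{\numold}[1]{{\addfontfeature{Numbers=OldStyle}#1}}
\begin{document}
Lining figures: 0123456789. Old style figures: \numold{0123456789}.
\end{document}
```

The extra pair of braces in the definition keeps the font feature change local to the argument.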

In all of my examples above, the serif font is Linux Libertine, and the monospaced font is DejaVu Sans Mono.

Other Thoughts

You may have noticed that I have chosen to use my own custom lstlisting environment (with the \lstnewenvironment command). The only reason I did this is that it lets me run a custom command at the start of each listing environment. In my case, it’s \setcounter{lstNoteCounter}{0}, which resets the notes back to 0 for the listing.

Feel free to use my code. If you make improvements to it, please let me know! By the way, Linux Libertine’s LaTeX package supports other enumerated glyphs, too (e.g., white-circle-enclosed numbers, or even circle-enclosed alphabet characters). Or, you could even use plain letters enclosed inside a \colorbox command, if you want. You can also put the line numbers right next to the \lnote numbers (we just add numbers=left to the \lstnewenvironment options, and change \lnote’s \hskip to 2.7em; I also changed the line numbers to basic italics):

\lstnewenvironment{csource2}[1][]
{\setcounter{lstNoteCounter}{0}% reset the notes for each listing, as before
\lstset{basicstyle=\ttfamily,language=C,numberstyle=\itshape,numbers=left,frame=lines,framexleftmargin=0.5em,framexrightmargin=0.5em,backgroundcolor=\color{LemonChiffon1},showstringspaces=false,escapeinside={(*@}{@*)},#1}}
{\stepcounter{lstCounter}} % needs \newcounter{lstCounter} in the preamble
\newcommand*{\lnote}{\stepcounter{lstNoteCounter}\llap{{\lnnum{\thelstNoteCounter}}\hskip 2.7em}}

Output:

Or we can swap their positions (by adding the numbersep= option to the \lstnewenvironment declaration, and leaving \lnote as-is with just 1em of \hskip):

\lstnewenvironment{csource2}[1][]
{\setcounter{lstNoteCounter}{0}% reset the notes for each listing, as before
\lstset{basicstyle=\ttfamily,language=C,numberstyle=\itshape,numbers=left,numbersep=2.7em,frame=lines,framexleftmargin=0.5em,framexrightmargin=0.5em,backgroundcolor=\color{LemonChiffon1},showstringspaces=false,escapeinside={(*@}{@*)},#1}}
{\stepcounter{lstCounter}} % needs \newcounter{lstCounter} in the preamble

Output:

The possibilities are yours to choose.
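As one more possibility, the \colorbox idea mentioned earlier could be sketched roughly like this. I have not used it for any of the screenshots; \lnbox and \lnoteboxed are made-up names, and the colors, font size, and \fboxsep value are arbitrary starting points. It assumes xcolor is loaded and lstNoteCounter is defined, as in the preamble above.

```latex
% Hypothetical alternative to \lnnum: a white number on a small black box.
% Works for any number, so there is no "NUM TOO HIGH" limit.
\newcommand{\lnbox}[1]{%
  {\setlength{\fboxsep}{1.5pt}% tighter padding, local to this group
   \colorbox{black}{\textcolor{white}{\sffamily\bfseries\scriptsize #1}}}%
}
% Same idea as \lnote, but using \lnbox for the marker.
\newcommand*{\lnoteboxed}{\stepcounter{lstNoteCounter}\llap{\lnbox{\thelstNoteCounter}\hskip 1em}}
```

Inside a listing you would then write (*@\lnoteboxed@*) where the examples above use (*@\lnote@*), and refer to a note in the running text with \lnbox{1}, \lnbox{2}, and so on.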

In case you’re wondering, the unusual indenting of the section number into the left margin in my examples is achieved as follows:

\usepackage{titlesec}
\titleformat{\section}
{\Large\bfseries} % formatting
{\llap{
{\thesection}
\hskip 0.5em
}}
{0em}% horizontal sep
{}% before

It’s a simplified version of the code posted on the StackOverflow link above.

NB: The \contentsname command (used to render the “Contents” text if you have a Table of Contents) inherits the formatting from the \chapter command (i.e., if you use \titleformat{\chapter}). Thus, if you format the \chapter command like the \section command above with \titleformat, the only way to prevent this inheritance (if it is not desired) is by doing this:

\renewcommand\contentsname{\normalfont Contents}

This way, your Table of Contents’ title will stay untouched by any \titleformat command.

UPDATE December 7, 2010: Some more variations and screenshots.